= ASTRONAUTICAL EVOLUTION =

Issue 140, 7 June 2018 – 49th Apollo Anniversary Year


I, Starship

A talk presented to the Initiative for Interstellar Studies,

The Bone Mill, Charfield, Gloucestershire, UK

Friday 1 June 2018

A strategic goal for humanity on Earth and in space in 2061

A couple of years ago I wrote a post with this title. I suggested a number of aspirational goals we should be aiming at in space, and offered them as an inspiring way to present spaceflight to schoolchildren, particularly during and after Tim Peake’s flight to the ISS. I said we should be talking about large-scale passenger spaceflight around the Earth-Moon system, the beginnings of a space economy using the resources of the Moon and the near-Earth asteroids, manned flights to Mars, self-sufficient cities on Earth with sustainable solar and nuclear energy, a growing global culture with an astronautical perspective on our place in the universe, and the first interstellar probes departing to the nearest stars.

But looking back at it now, I see this is missing something. Specific goals such as these pale into insignificance in comparison with the question of what we ourselves will have become. If it happens as advertised, artificial intelligence will fundamentally change the nature of human personality, politics, technology and civilisation. This is what we need to be thinking about now.

I, Starship – Scenarios for the brains that control a spacefaring civilisation

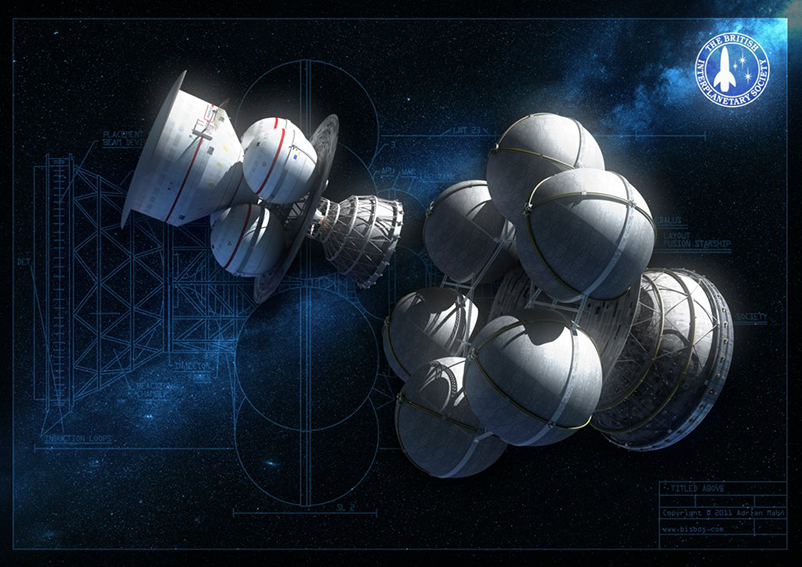

We know already that any interstellar vehicle will have a high level of autonomy. Here’s a vision as to what such a vehicle might be like:

“Cassandra […] felt excitement. She had been caged in a lunar laboratory for over twenty years as the humans had perfected her circuitry and experimented with her neural networks. Now she was free, herself embedded within the metallic spacecraft that now formed the flesh and bones surrounding her computational heart. […] But to her the emotions she felt were real. These were built into her so she could better communicate in her transmissions the discoveries she would make, as though a human were observing the same thing.”

– K.F. Long, “The Dance of Angels”, Visionary (BIS, 2014), pp. 69-70.

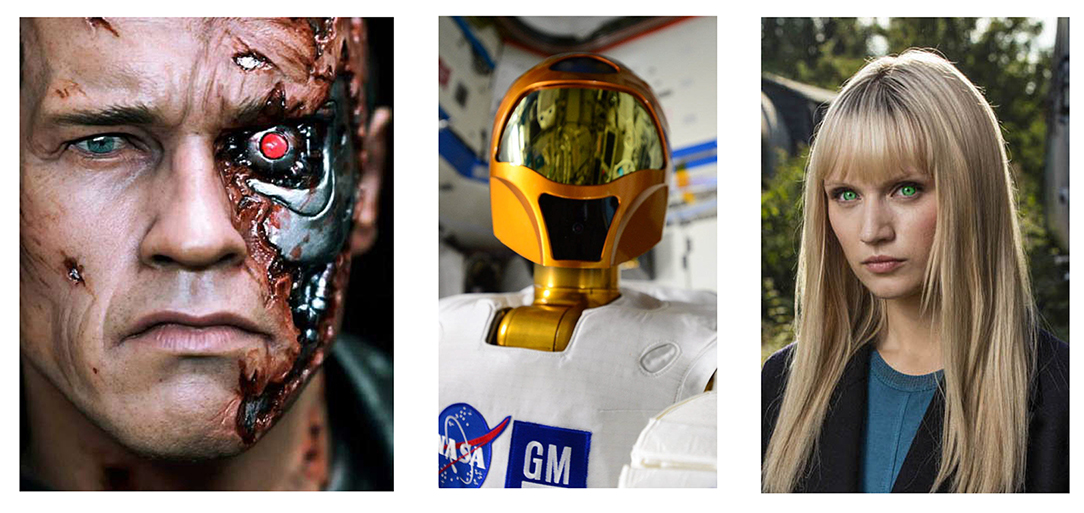

I like that quotation. I think that’s what spacecraft will be like, 50 to 100 years from now. Present-day technology is clearly closer to some form of general artificial intelligence than it is to interstellar travel. The markets already exist for general-purpose robots able to emulate human intelligence and personality:

- Caring for the very young and the very old;

- Domestic service for the well-to-do;

- Service roles in hotels, restaurants, and so on;

- Police and emergency workers in dangerous environments.

To which in the not so distant future we can perhaps add:

- Construction workers on Mars.

When the intelligent, autonomous, humanoid, general-purpose robots demanded by these markets are combined with the single-purpose machines which are being developed to drive road vehicles and perform other specific tasks, the progression to a spacecraft which thinks in the same manner as a human personality seems to be a logical outcome. But what does that say about the nature of the spacecraft-building civilisation from which that spacecraft comes?

At some point in the future we will be ready to launch capable interstellar probes, by which I mean ones capable of exploring exoplanets with the full range of orbiters, landers and sub-surface divers and burrowers needed to characterise in detail those planets’ geology and, if they have any, their biology. Such capability is necessary to answer the age-old questions about life in the universe. But by this time, will the main decision-making centres of our civilisation still be biological, controlled by human brains? Or will they be digital? Or some mixture of the two?

In 1993 Vernor Vinge predicted that a singularity in human affairs would occur when robot intelligence surpassed human intelligence such that humans were no longer the dominant intelligent species on the planet. He expected this to happen after about thirty years, thus in the early 2020s: “Shortly after”, he wrote, “the human era will be ended.” J. Storrs Hall places human-equivalent robots in the mid-2030s; Ray Kurzweil predicted the year 2029. Is this hype, or is it an inevitable result of the information revolution?

(J. Storrs Hall, Beyond AI: Creating the Conscience of the Machine (Prometheus, 2007), pp. 335, 258, 86.)

Like the question of whether alien life or civilisations exist, the future of intelligent life on Earth itself is uncharted territory. Therefore no Bayesian prior probabilities can be applied. We need to keep a range of possible scenarios in mind, and to free our minds from any preconceptions as to which scenario we personally find most plausible, or most attractive, or most morally fitting.

Let me ask some questions about artificial intelligence, questions which help to generate scenarios.

Hugo de Garis and other enthusiasts like to speculate glibly about machines with trillions of times our own mental power, machines which would be godlike relative to ourselves. But can intelligence go on increasing forever? Is there a maximum possible level of intelligence? Is there a point of brain growth beyond which the tendency towards mental illness increases faster than rational calculation? Is there a sort of Goldilocks zone for an ideal brain: not too small, not too large? Of course we don’t yet know.

Again, the enthusiasts focus on pure processing power and equate that to intelligence. Human intelligence, however, is not just a computational problem-solving ability: it is combined with body and sense impressions, dreams, feelings, desires, and ethical sensibilities in its interactions with others. What then about the correlation between sheer processing power and what we would regard as ethical enlightenment? Might that correlation be positive, or might it be negative, or might there be no necessary correlation at all? Again, we don’t yet know.

At some point in the future robots will presumably become competent to have an opinion on political policies: on international trade, immigration, taxation, resolution of military conflicts (the Middle East, Africa, Korea), public funding versus private enterprise, interplanetary and interstellar exploration and development. But even when they are more capable than us, the robots will still suffer from being in a position similar to that of human politicians: they will have incomplete information about society and the economy, unreliable forecasting of the response of such a complex, mathematically chaotic system to perturbations, and conflicting objectives. Will the robots be in fact any better at decision-making than the fallible humans they might wish to replace?

Will there be a smooth transition from human to robot decision-making? Or will the robots be kept out of politics by law? Will democracy survive the present century, or will it be replaced by an authoritarian state run by humans or by robots? And will that be a benevolent or a tyrannical state? And then who will decide whether to devote resources to interstellar exploration, or not? Who will determine cultural attitudes to progress and technology? Until these questions have been resolved by actual practical experience, it is premature to set too much store by plans for space colonisation or interstellar travel.

So that’s enough questions for the present. Here is my selection of possible future scenarios. Let me repeat: I have my favourite scenario. Others will no doubt have their favourites. But these are not meaningful Bayesian priors. The future is open, undetermined, unknown until we get there!

Potential future scenarios

(1) Suppose that robots do not surpass human abilities at all. Obviously they already do in many specific areas – such as playing board games, planning distribution networks, designing spacecraft trajectories. Here I’m talking about the ability to function as a rounded personality, in other words, one competent to make decisions at the broadest political, economic and cultural levels, with genuine understanding of what it is doing and of the context in which it does it.

The status of robots in the first scenario stays where it is today, thus they remain subhuman. So, Scenario One: Stupid Robots.

Humans are not exactly the masters, because their decisions are constrained by the workings of the computerised economy, but at least they still feel in control. Clearly, this feeling has always been partly illusory.

Note that a commonly expressed fear of the Singularity is that a machine will display superhuman intelligence in a particular task, such as manufacturing a product, and at the same time subhuman stupidity in failing to understand the context in which its product is needed or the balance required between production of that commodity and other economic and cultural activities. Clearly, I would not call this artificial intelligence at all.

(2) Scenario Two: suppose the robots match human abilities as rounded personalities, are recognised as legal persons, but go no further and end up becoming one more nation among many. The TV series Humans now playing on Channel 4 portrays its robots, or synthetics as it calls them, in this way. The positive characters in the show are trying to liberate them to achieve this status, while the negative ones don’t recognise the synths as conscious individuals and are trying to keep them in Scenario One. In fact the show is now portraying the synthetics as something very much like illegal immigrants from some foreign shore, who are unwanted and dangerous and must be confined to prison camps or else destroyed.

So, Scenario Two: Foreign Robots.

(3) Scenario Three: the big fear is of course that robots with superhuman intelligence will also be moral imbeciles. Such robots would allow humans to continue to exist only as slaves, or toys, or as game for hunting down, or as experimental specimens. Or else they would exterminate us, perhaps as we would exterminate a nest of rats as disgusting vermin, or perhaps by simply ignoring us and taking over all our land, pushing us to the margins until we are driven to extinction.

So, Scenario Three: Nazi Robots.

(4) Scenario Four: suppose, on the other hand, that a superintelligent being can or even must display compassion to all living things. Might greater intelligence entail greater empathy? Such a robot would revere humans as people in oriental cultures revere their ancestors. It would be a collaborator with humans, not a competitor. It might be a god, but a benevolent god.

So, Scenario Four: Buddhist Robots.

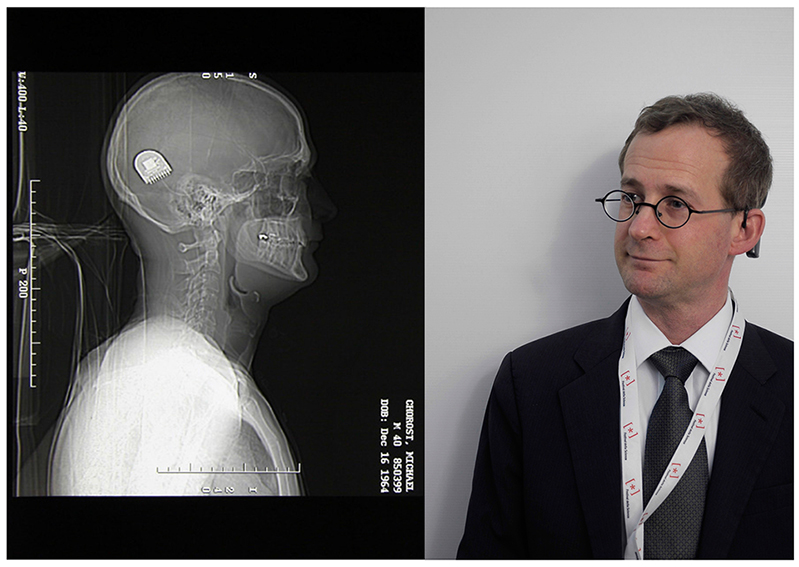

(5) Scenario Five takes a different direction, in which humans and robots merge together into organisms with a mixture of biological and technological components, highly networked among themselves into a hive mind. Here the distinction between natural and artificial becomes increasingly blurred, until it no longer matters at all.

Scenario Five is therefore: Cyborgs.

Note that humans have always tended to turn themselves into cyborgs: they have augmented themselves with prostheses such as artificial limbs, pacemakers, spectacles, clothing even, and with tools, weapons, musical instruments, motor vehicles.

Note also that human communication with machines is on a trend of greater intimacy, from punched tape, to keyboards, to point and click, and now to direct connection between computer chips and neurons in the brain. In his book World Wide Mind, Michael Chorost describes how this works in the case of the ear implants which allow him, a deaf person, to hear again. Note the subtitle of his first book, Rebuilt: How Becoming Part Computer Made Me More Human. Chorost’s cochlear implant gives him a quarter of a million transistors hardwired into his skull. As he describes in his second book, this is only the beginning.

(Michael Chorost, World Wide Mind: The Coming Integration of Humanity, Machines and the Internet (Free Press, 2011).)

Persuasive as the Cyborg Scenario is, it does of course still depend upon the continued willingness of the robots to accept input from biological brains.

Also, it raises the question as to whether human consciousness can migrate from biological neurons to computer chips. If everybody uploads their conscious self to a faster, more capacious machine brain, achieving immortality in the process, will humanity as we understand it today have become extinct? Or will it have become even more human, with even more of the qualities which we value about ourselves today?

(6) And finally, just a reminder that there may be other possibilities that I’ve not thought of. So Scenario Six is a simple question mark.

Another way of addressing Scenario Six is to think of it as a combination of two or more of the preceding scenarios, which are of course not mutually exclusive. If a variety of super-intelligent robots of different capabilities and ethical outlooks are in play, things could get very complicated and messy. That, however, is left as an exercise for the science-fiction writers among us.

So, to conclude: what happens when terrestrial life finally emerges out of the cradle for good and establishes itself on the other planets and in space colonies in its home planetary system, and ultimately in exoplanetary systems? Who decides when to fly and where to? Who allocates the resources? Who goes on the voyage? And who ends up as the rulers of the Galactic Empire?

This table presents a range of possible outcomes, from continuing human control of the future, through various combinations of human and robot control, to humanity extinct and robots everywhere at the other end of the spectrum. This last is of course what Paul Davies was predicting when he wrote:

“In a million years, if humanity isn’t wiped out before that, biological intelligence will be viewed as merely the midwife of ‘real’ intelligence – the powerful, scalable, adaptable, immortal sort that is characteristic of the machine realm. […] the self-created godlike mega-brains will seek to spread across the universe. By the same token, we can expect any advanced extraterrestrial biological intelligence to long ago have transitioned to machine form.”

(Paul Davies, The Eerie Silence: Searching for Ourselves in the Universe (Penguin, 2010), p.161.)

Whether and to what extent this happens over the next few hundred years on and around Earth will shape our interplanetary and interstellar enterprises in ways which are hard to guess and impossible to predict.

